The rapid growth of artificial intelligence is characterised not only by increasingly complex models, but also by a steady shift in where the computing takes place.


While many applications in recent years have relied on the data centres of large cloud providers, innovations in hardware and model compression techniques mean that more and more tasks are being performed directly on devices. This white paper describes the key technological and economic drivers behind this development, provides an overview of the underlying concepts and explains the implications for businesses, investors and developers.

Rise of specialised AI hardware

Neural computers and chips for local AI

For several years now, processor manufacturers have been integrating dedicated accelerators to run neural networks more efficiently. Apple is a prominent example with its M series: the M4 processor, unveiled in spring 2024, contains a ‘Neural Engine’ that, according to the company, performs up to 38 trillion operations per second (38 TOPS), giving it more computing power than the NPUs of many AI PCs[1]. Alongside the classic CPU and GPU cores, which also support machine learning functions, and faster memory, this dedicated unit makes the chip particularly suitable for artificial intelligence applications[1]. Such integrated accelerators are found not only in tablets and smartphones, but increasingly also in notebooks and desktop systems.

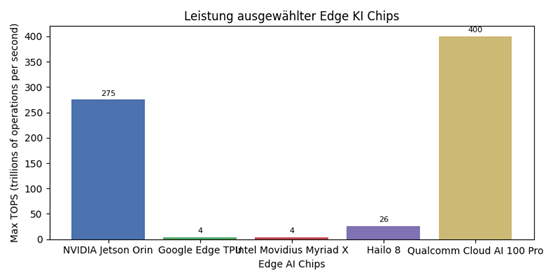

A look at the market for edge chips shows a significant increase in performance. An analysis by the research platform AIMultiple lists various edge processors with their maximum computing power in tera-operations per second (TOPS). According to this overview, NVIDIA's Jetson Orin achieves up to 275 TOPS, while Google's Edge TPU and Intel's Movidius Myriad X each deliver 4 TOPS. Specialised chips such as the Hailo 8 deliver 26 TOPS with very low energy consumption, and Qualcomm's Cloud AI 100 Pro can provide up to 400 TOPS depending on the configuration level[2]. This high computing capacity, combined with low power draw, facilitates the use of sophisticated models on robots, cameras or vehicles.

From the data centre back to the device

The more powerful chips are made possible by advanced manufacturing processes and optimised architectures. Compared to previous generations, the M4 processor can deliver higher performance per watt thanks to a smaller process node and more efficient memory connectivity. Other manufacturers are pursuing similar strategies: Intel's Lunar Lake platform is designed to deliver over 100 TOPS of AI performance, with a large portion coming directly from the NPU. Combined with modern wireless technologies such as 5G, this reduces the latency between sensor data and evaluation, enabling real-time applications in industrial environments. The integration of such chips into PCs is leading to a new class of devices that Microsoft is promoting as ‘Copilot+ PCs’ because they can perform generative functions offline.

Concepts and methods of localised models

Why compression is necessary

A major obstacle to local AI applications is the size of current models. Many generative language models consist of several billion parameters, which requires considerable storage space and high computing power. A recent scientific study highlights that the shift of AI models to the edge is made possible by three areas of optimisation: data optimisation, model optimisation and system optimisation[3]. The authors emphasise that the increasing amount of data on end devices and the need for local processing require efficient models[3].
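To make the storage argument concrete, the following minimal sketch estimates the space needed for a model's weights alone as parameters times bits per parameter. The 7-billion-parameter model size and the function name `weight_footprint_gb` are illustrative assumptions, not figures or names from the cited study, and the estimate ignores activations and runtime overhead.

```python
# Rough weight-storage estimate: parameters x bits per parameter.
# The model size used below is a hypothetical example, not a figure from the cited study.

BITS_PER_PARAM = {"fp32": 32, "fp16": 16, "int8": 8, "int4": 4}


def weight_footprint_gb(num_params: int, precision: str) -> float:
    """Return the storage needed for the weights alone, in gigabytes (10^9 bytes)."""
    bits = BITS_PER_PARAM[precision]
    return num_params * bits / 8 / 1e9


params = 7_000_000_000  # hypothetical 7-billion-parameter language model
for precision in BITS_PER_PARAM:
    print(f"{precision}: {weight_footprint_gb(params, precision):.1f} GB")
```

Under these assumptions, the same model shrinks from 28 GB at 32-bit precision to 3.5 GB at 4-bit precision, which illustrates why lower-precision formats matter for devices with a few gigabytes of memory.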

Model optimisation methods

Developers use two approaches to adapt models for devices with limited resources: designing compact architectures and compressing existing models. Compact architectures such as MobileNets or EfficientNet are optimised for efficiency from the outset and deliver solid results in mobile vision applications with a small number of parameters[4]. In addition, existing models can be reduced in size through compression. The methods described in the cited study include removing irrelevant weights (pruning), sharing parameters, reducing numerical precision through quantisation, transferring knowledge from a large model to a smaller one via ‘knowledge distillation,’ and low-rank factorisation[5]. By combining these techniques, neural networks can be significantly reduced in size without greatly compromising accuracy[6]. The authors also cite examples such as ‘deep compression,’ in which multiple methods are combined to massively reduce the memory requirements of networks[7].
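Two of the compression methods named above, pruning and quantisation, can be sketched on a toy list of weights. This is an illustration under stated assumptions rather than the procedure of the cited study: the sample weights, the 50 % sparsity target, the symmetric 8-bit scheme and all function names are chosen here for demonstration only.

```python
# Magnitude pruning followed by symmetric int8 quantisation, in pure Python.
# Sample weights, sparsity level and quantisation scheme are illustrative assumptions.


def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices of the k smallest-magnitude weights
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] with one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in quantized]


weights = [0.82, -0.03, 0.41, 0.005, -0.67, 0.12, -0.009, 0.30]
pruned = magnitude_prune(weights, sparsity=0.5)
quantized, scale = quantize_int8(pruned)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(pruned, restored))
print(pruned)
print(quantized, scale, max_error)
```

In this sketch each surviving weight is stored as one 8-bit integer plus a single shared scale, and the rounding error per weight is bounded by half the scale. Real toolchains typically add per-channel scales, calibration data and fine-tuning after pruning, none of which is shown here.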

Lightweight multimodal models

An important trend is that multimodal models can now run on the device itself. In its technical report, the team behind the Octopus v3 model presents a compact multimodal network that has fewer than a billion parameters and can process both language and images thanks to special ‘functional tokens’[8]. Due to its small size, the model works on devices with limited memory, such as a Raspberry Pi[8]. Such developments show that generative and multimodal capabilities are not necessarily tied to large cloud infrastructures.

Use cases and new product classes

Autonomous systems and smart factories

Edge AI is used where low latency and independence from the network are required. The aforementioned Edge AI study cites autonomous vehicles, virtual reality games, smart factories, surveillance cameras and wearable health devices as typical applications[9]. By processing sensor data immediately, autonomous vehicles can react quickly, while smart factories can implement flexible production processes. Wearables analyse vital data without a constant connection to the internet and still offer personalised evaluations.

Edge AI devices such as MAVERICKAI

The MAVERICKAI laptop developed by AMERIA is one example of such a new product class. According to the company, the device is intended to make artificial intelligence accessible to everyone. It combines a personal assistant function and a platform for interacting with three-dimensional content without the need for additional devices such as VR glasses[10]. Users can communicate with the integrated assistant via voice input, while the system orchestrates several AI services in the background[11]. Thanks to motion and object recognition in three-dimensional space, the device can be operated without a keyboard or mouse. The user interface, which can be used both privately and in everyday working life, is thus completely virtualised[12]. In future, even surgical procedures could be supported by integrating the laptop with operating theatre data[13]. These examples show that innovative hardware paired with local intelligence is giving rise to entirely new product categories.

Industrial examples of multimodal on-device models

The increasing performance of compact models enables further demonstrations. The Octopus v3 model, which runs on small devices as mentioned above, illustrates how multimodal agents can perform tasks that were previously reserved for much larger models. Further announcements, for example from Google and Qualcomm, indicate that smartphones and PCs will soon be able to process multimodal inputs such as text, images and audio locally. These capabilities pave the way for applications such as advanced personal assistants, augmented reality glasses that translate in real time and intelligent robotics systems.

Impact on infrastructure, business models and data protection

Changed cloud infrastructure

When more data is processed locally, the need for a constant connection to cloud data centres is reduced. The cited edge AI survey highlights that local execution leads to faster decisions, lower bandwidth consumption and improved reliability[9]. At the same time, new challenges arise: models must be managed in a distributed manner, and integration into enterprise-wide architectures requires sophisticated placement, migration and scaling strategies[14]. Despite the shift, close integration of cloud and edge systems will remain necessary to aggregate training data, roll out updates and cushion peak loads.

Security and privacy

The processing of sensitive data on devices increases the requirements for protection mechanisms. According to the Edge AI Survey, the distributed nature of edge computing requires new security concepts. Data anonymisation, trusted execution environments, homomorphic encryption and secure multi-party computation are proposed [15]. Federated learning is another key concept: models are trained on a variety of devices without raw data leaving the device [16]. In the future, blockchain technologies could also be used to make the exchange and evaluation of data decentralised and tamper-proof [17].
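The federated learning idea can be illustrated with one common aggregation scheme, a sample-weighted average of the locally trained weights (often called federated averaging). The cited survey does not prescribe this particular scheme; the function name `federated_average` and the client values below are assumptions made purely for illustration.

```python
# Federated averaging sketch: each device reports its locally trained weights and
# its sample count; only these values, never the raw data, reach the aggregator.
# The client updates below are made-up numbers for illustration.


def federated_average(client_updates):
    """Combine (weights, num_samples) pairs into a sample-weighted mean."""
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must report the same number of weights")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


updates = [
    ([0.10, 0.50], 100),  # device A: weights after local training, 100 samples
    ([0.30, 0.10], 300),  # device B: weights after local training, 300 samples
]
global_weights = federated_average(updates)
print(global_weights)
```

Device B contributes three times as many samples and therefore pulls the result three times as strongly. A production system would add secure transport and protection of the updates themselves, for example with the mechanisms listed above.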

Economic implications

The shift of intelligence to the device itself is changing business models. Semiconductor manufacturers are benefiting from the demand for specialised processors and NPUs. Cloud service providers need to redefine their role and offer additional edge services. At the same time, new sources of revenue are emerging in the area of hardware-based subscription models, where software functions are tied to specific chips. End users benefit from lower latency and improved availability. Companies can meet stricter data protection requirements and comply more easily with local regulations.

Recommendations for decision-makers and investors

Pilot projects for edge AI

Companies should initiate pilot projects at an early stage to gain experience with edge technologies. Areas of application with strict latency requirements, such as quality control in production or autonomous logistics, are particularly suitable. It is advisable to start with small, self-contained tasks and analyse the results systematically. Investments in expertise in quantisation, distillation and other compression methods are necessary in order to successfully adapt proprietary models.

Selecting suitable hardware

When selecting hardware, it is important to consider not only the total TOPS, but also energy efficiency, software stack support and availability. NVIDIA's Jetson Orin, for example, is designed for robotics and autonomous systems and offers up to 275 TOPS at 10–60 watts. Google's Edge TPU is suitable for IoT applications with very low energy consumption, while the Hailo 8 provides a good balance of performance and efficiency for smart cameras[18]. For high-performance scenarios, companies can turn to chips such as Qualcomm's Cloud AI 100 Pro, which achieves up to 400 TOPS[18]. The providers' software ecosystems and their support for frameworks such as PyTorch Mobile or TensorFlow Lite should be weighed alongside these figures.
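A first-pass shortlist along these lines can be sketched as follows, using only the peak TOPS figures quoted in this paper. The 20 TOPS requirement and the helper names are hypothetical. Power draw is stated in the text for the Jetson Orin alone, so the efficiency figure is computed only for that chip, and pairing its peak TOPS with the upper end of its power range is a simplifying assumption.

```python
# Shortlisting edge chips by peak TOPS, using the figures quoted in this paper.
# max_watts is None where the paper gives no power figure.

CHIPS = [
    {"name": "Jetson Orin", "peak_tops": 275, "max_watts": 60},
    {"name": "Google Edge TPU", "peak_tops": 4, "max_watts": None},
    {"name": "Intel Movidius Myriad X", "peak_tops": 4, "max_watts": None},
    {"name": "Hailo 8", "peak_tops": 26, "max_watts": None},
    {"name": "Qualcomm Cloud AI 100 Pro", "peak_tops": 400, "max_watts": None},
]


def tops_per_watt(chip):
    """Crude efficiency figure: peak TOPS divided by maximum power, if known."""
    if chip["max_watts"] is None:
        return None
    return chip["peak_tops"] / chip["max_watts"]


def shortlist(chips, required_tops):
    """Return the chips that meet the TOPS requirement, strongest first."""
    fit = [c for c in chips if c["peak_tops"] >= required_tops]
    return sorted(fit, key=lambda c: c["peak_tops"], reverse=True)


candidates = shortlist(CHIPS, required_tops=20)  # hypothetical workload requirement
for chip in candidates:
    print(chip["name"], chip["peak_tops"], tops_per_watt(chip))
```

Peak TOPS is only a starting filter. Sustained throughput on the target model, supported numeric formats and toolchain maturity usually have to be measured in a pilot before a final choice is made.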

Planning infrastructure and security

Edge projects require careful infrastructure planning. This includes managing distributed models, mechanisms for updating models over insecure networks, and strategies for migrating between nodes [14]. In addition, companies should invest in security mechanisms at an early stage, such as trusted execution environments or homomorphic encryption [15]. Implementing federated learning can help comply with data protection regulations while benefiting from distributed data [16].

Outlook for investors

Investors in the semiconductor and infrastructure sectors should keep a close eye on market opportunities in the edge segment. Demand for NPU-enabled processors is likely to increase thanks to new product categories such as MAVERICKAI and industrial applications. Investments in manufacturers of energy-saving chips, software providers for model compression and companies with expertise in federated learning platforms could pay off. It is also worth taking a look at start-ups that develop multimodal models specifically for use on edge devices, along the lines of the research presented in the Octopus v3 report[8].

Conclusion

The trend towards local processing of AI models is unmistakable. Advances in specialised chips that deliver hundreds of TOPS and methods for reducing model size make it possible to run demanding applications without a permanent cloud connection. Products such as MAVERICKAI and research findings on multimodal compact models demonstrate that new markets and usage scenarios are opening up. For companies, this development presents both opportunities and challenges: they can offer data protection-friendly and low-latency services, but at the same time they must invest in hardware, model optimisation and security measures. Investors benefit from growing demand in the semiconductor sector and from new business models arising from the increasing self-sufficiency of devices. The future of artificial intelligence will thus be more decentralised.

Performance of selected edge AI chips

Fig. 1: Comparison of the maximum computing power of selected edge chips. Data according to AIMultiple Research 2025 [2].

[1] Apple introduces M4 chip - Apple

https://www.apple.com/newsroom/2024/05/apple-introduces-m4-chip/

[2] [18] Top 20 AI Chip Makers: NVIDIA & Its Competitors in 2025

https://research.aimultiple.com/ai-chip-makers/

[3] [4] [5] [6] [7] [9] [14] [15] [16] [17] Optimizing Edge AI: A Comprehensive Survey on Data, Model, and System Strategies

https://arxiv.org/html/2501.03265v1

[8] Paper page - Octopus v3: Technical Report for On-device Sub-billion Multimodal AI Agent

https://huggingface.co/papers/2404.11459

[10] [11] [12] [13] AMERIA AG | MAVERICK AI | Our journey towards a Touchfree future for everyone.

https://www.ameria.com/maverickai

AMERIA, a leading European deep-tech company based in Heidelberg, is shaping the future of human-machine interaction through groundbreaking digital technologies. With MAVERICKAI, AMERIA is developing the first true AI device built on a laptop platform: a combination of smart software, a revolutionary AI assistant, and a sleek form factor.
